Roy T. Forestano

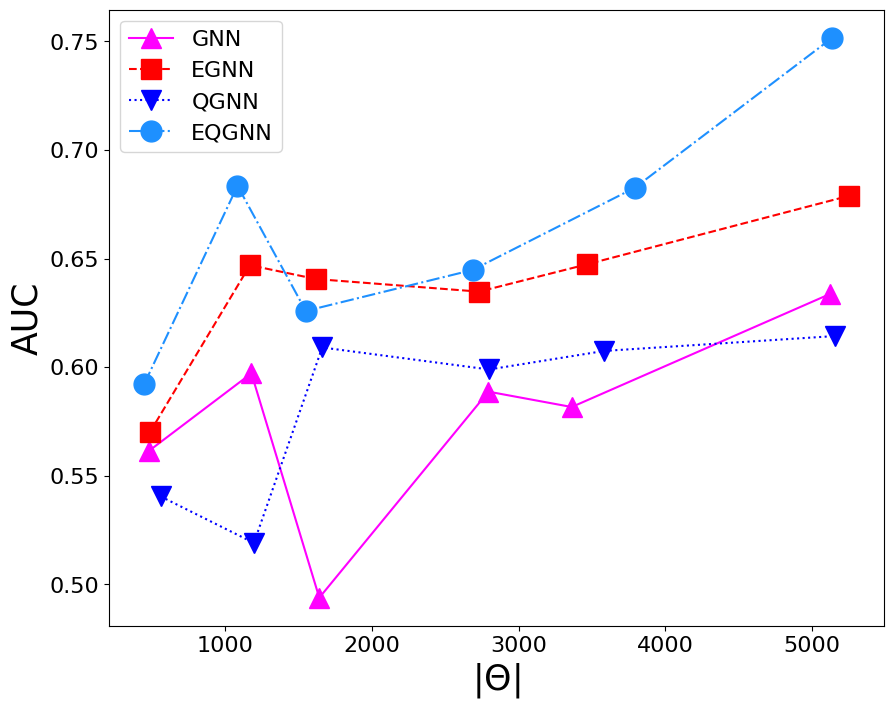

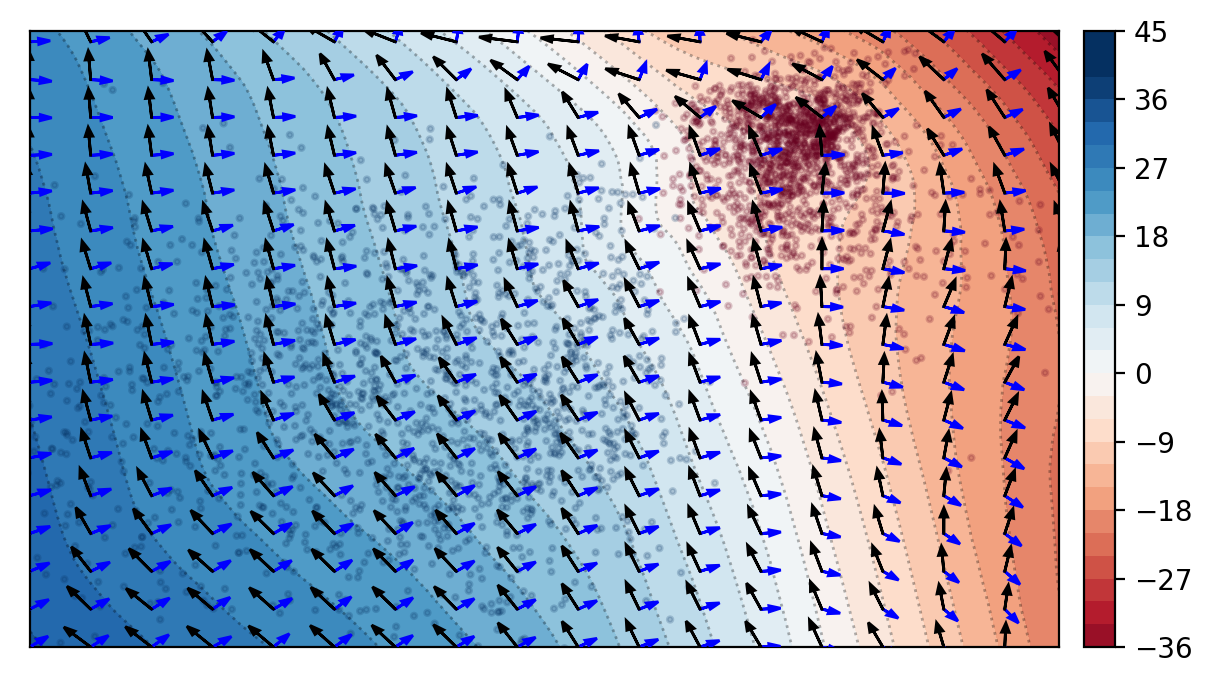

Welcome! I am a research scientist interested in machine learning (ML), artificial intelligence (AI), quantum machine learning (QML), and interpretability.

In 2021, I graduated from Boston College (BC) with a B.S. in physics and a B.S. in mathematics. In 2025, I earned a Ph.D. in physics from the University of Florida (UF), where I developed interpretable classical and quantum ML methods and applied them to high-energy physics and astrophysics.

Outside of research, I enjoy Olympic weightlifting, watching and playing sports, cooking, reading, listening to podcasts, and being outdoors.

news

| Aug 11, 2025 | Graduated with a Ph.D. in physics from the University of Florida |

|---|---|

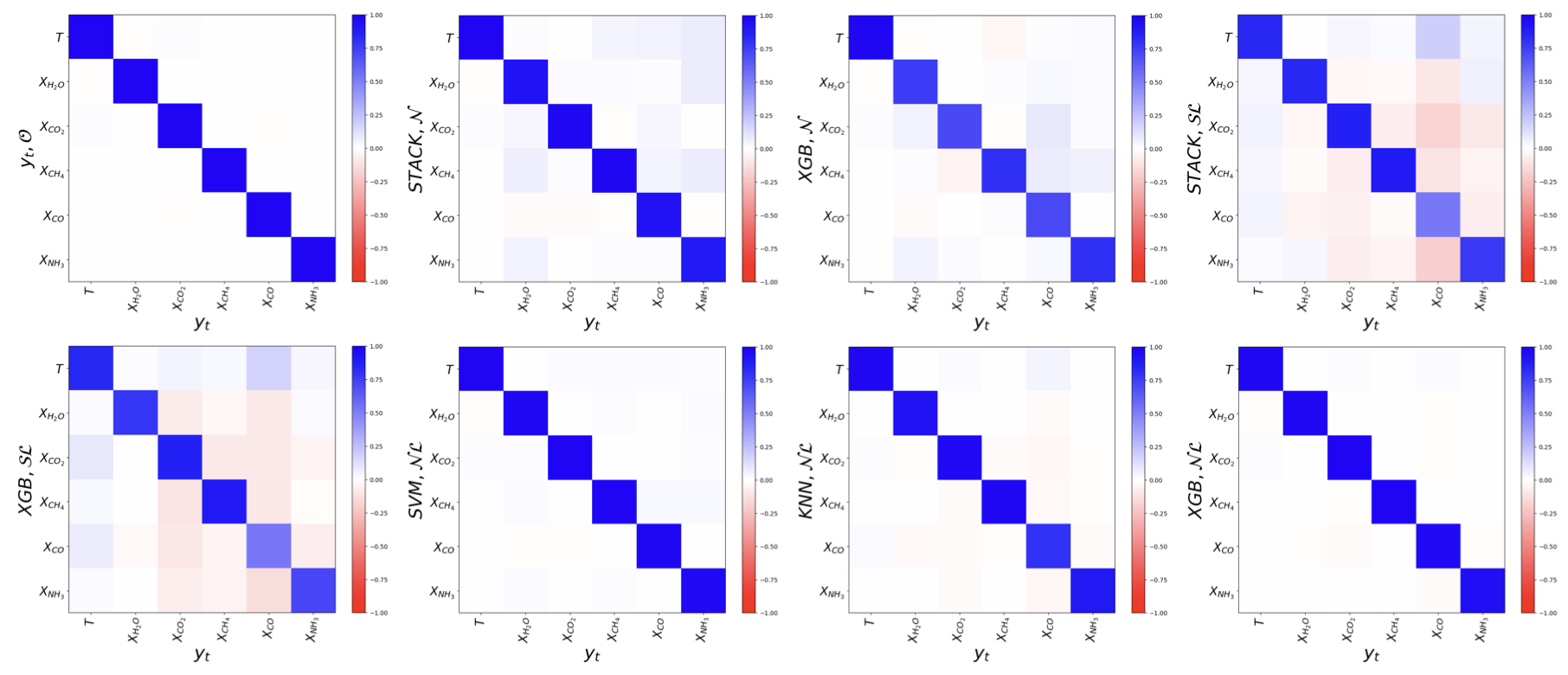

| Aug 7, 2025 | Submitted Supervised Machine Learning Methods with Uncertainty Quantification for Exoplanet Atmospheric Retrievals from Transmission Spectroscopy to AAS Journals. |

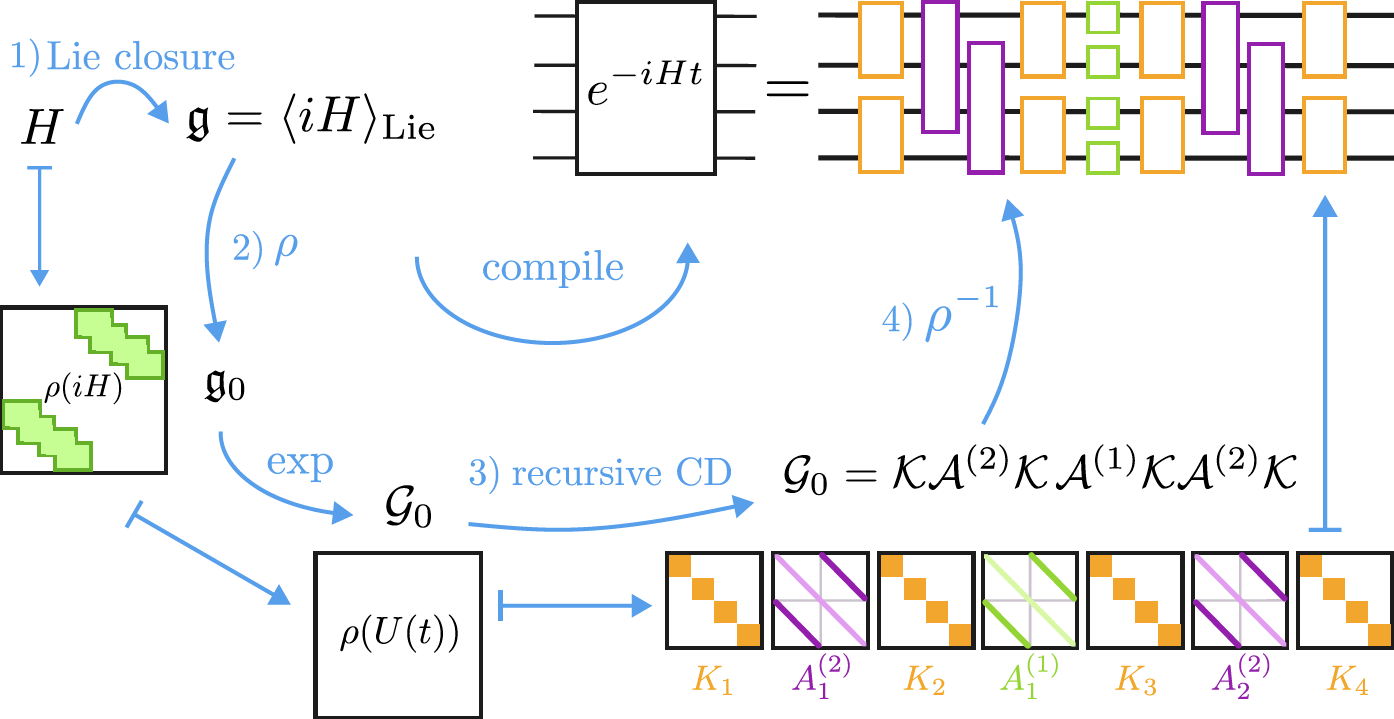

| Aug 10, 2024 | 2024 Los Alamos National Lab Quantum Computing Fellowship |